Transparent, predictable, high‑performance semantic message routing for AI applications

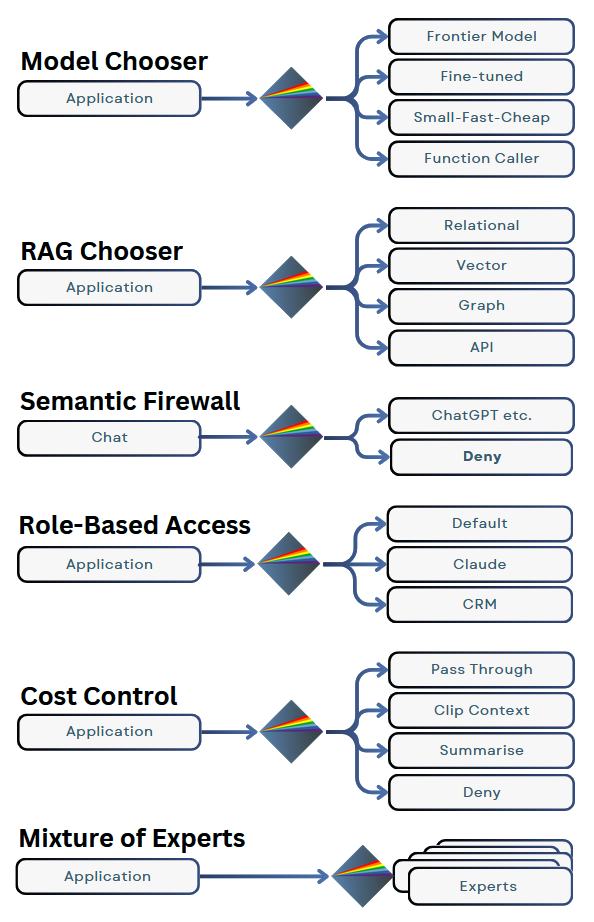

langpath is a semantic router. It lets you control the path that AI information takes through your application or through your infrastructure. It’s like a mail sorting centre for AI prompts and outputs.

Use langpath to reduce LLM costs (and their carbon footprint), protect private and customer data, avoid reputation damage and improve customer experience.

langpath is an LLMOps component for use in LLM-based applications. It simplifies orchestration of your AI applications - whether you use LangChain, LlamaIndex, Semantic Kernel or one of the many Enterprise and Cloud orchestration frameworks. langpath understands the meaning and intent of messages, so it lets you centralise and formalise how that meaning is handled by your applications.

Your langpath Router is cloud software (SaaS) with a generous free plan. Enterprise users can run multiple Router instances in their own private cloud. They can also orchestrate, configure, manage and report on these instances using the langpath Conductor suite of tools.


Under the Hood

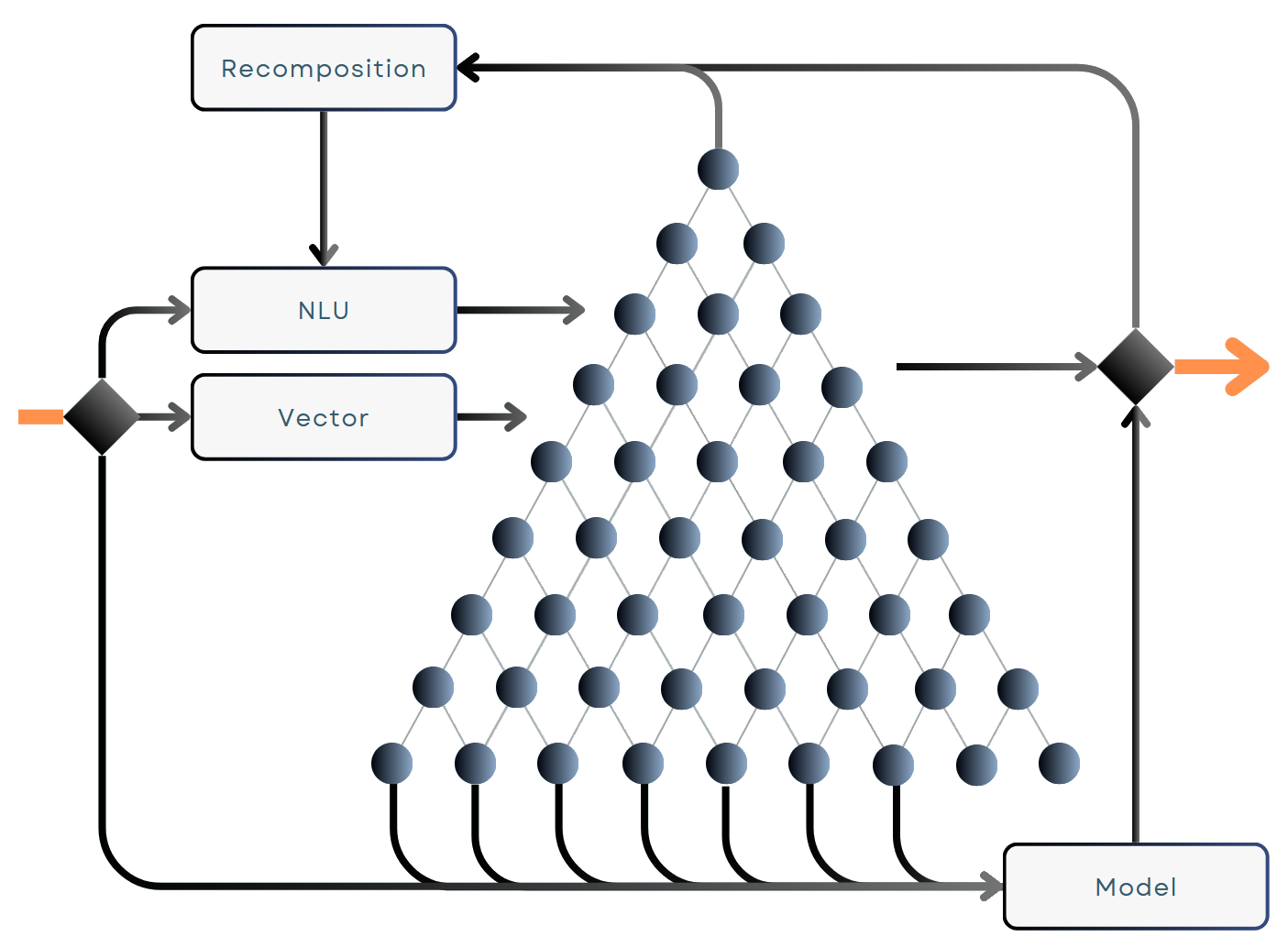

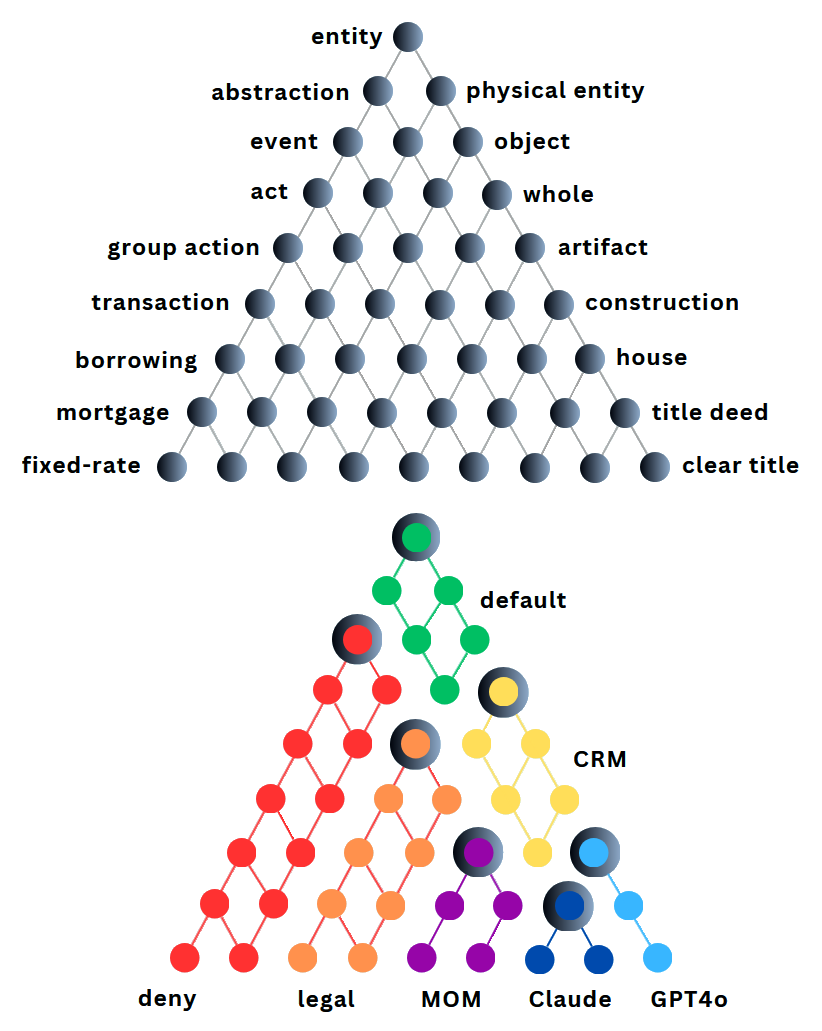

The core of langpath is a descriptive knowledge graph or ontology. This graph contains more than 100,000 connected concepts that cover just about everything that humans talk about and use to describe their world.

More than one million words can be used to search this graph and the connections in the graph allow Natural Language Understanding (NLU) logic to be applied during that search.

This NLU-based search is highly effective. It’s transparent, predictable, fast and computationally inexpensive. To make it even more effective, each concept carries a precisely worded description that allows a vector (embeddings) search to augment the NLU result.

langpath doesn’t stop there, however. If it’s not confident in the concepts it’s mapped to an incoming message, it can use a plugged-in language model to recompose the input - using a controlled vocabulary collected from the knowledge graph.
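The cascade described above can be sketched in a few lines of Python. This is an illustration only, not langpath's implementation: the `Match` type, the `map_to_concept` function, the 0.7 threshold, and the rule of keeping whichever search is more confident are all assumptions, and the toy search functions stand in for the real knowledge graph.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Match:
    concept: Optional[str]   # None when nothing matched
    confidence: float        # 0.0 (no match) to 1.0 (certain)


Search = Callable[[str], Match]


def map_to_concept(message: str, nlu_search: Search, vector_search: Search,
                   recompose: Optional[Callable[[str], str]] = None,
                   threshold: float = 0.7) -> Match:
    """Run both searches; if neither is confident, recompose and retry once."""
    def best_of(text: str) -> Match:
        # Assumption: keep whichever search reports the higher confidence.
        return max(nlu_search(text), vector_search(text),
                   key=lambda m: m.confidence)

    match = best_of(message)
    if match.confidence < threshold and recompose is not None:
        retry = best_of(recompose(message))
        if retry.confidence > match.confidence:
            match = retry
    return match


# Toy stand-ins for the real components (hypothetical data).
KEYWORDS = {"invoice": "billing", "refund": "billing",
            "password": "account security"}


def toy_nlu(text: str) -> Match:
    for word, concept in KEYWORDS.items():
        if word in text.lower():
            return Match(concept, 0.9)
    return Match(None, 0.0)


def toy_vector(text: str) -> Match:
    return Match("billing", 0.4) if "money" in text.lower() else Match(None, 0.0)


def toy_recompose(text: str) -> str:
    # A language model would rewrite the input using controlled vocabulary.
    return text.replace("my money back", "a refund")


direct = map_to_concept("Where is my invoice?", toy_nlu, toy_vector, toy_recompose)
rescued = map_to_concept("I want my money back", toy_nlu, toy_vector, toy_recompose)
```

In this sketch the first message is matched directly, while the second only reaches a confident match after it has been recomposed with a term the toy vocabulary recognises.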

These strategies work well for prompts and smaller inputs, but they’re not currently practical for large bodies of text. langpath allows a Classifier or Language Model to be plugged in and trained to handle these large contexts. Training uses synthetic data generated from the configured knowledge graph.

From Concepts to Paths

Configuring langpath is as easy as selecting blocks from its knowledge graph of 100,000+ connected concepts. Your selected block describes a “semantic scope” that will be applied to prompts and other data sent to the langpath Router. Select each block by choosing one or more parent concepts.

You’ll then assign a path and a priority to the block of concepts. Input that matches the block will be passed to the model, RAG store, API, middleware or other component that you specify.
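As a rough illustration of blocks, paths and priorities, the sketch below uses a toy concept graph. It is not langpath's configuration format: the `Block` type, the `route` function, the path names, and the rule that a higher priority number wins are all assumptions made for the example.

```python
from dataclasses import dataclass

# Toy concept graph, child -> parent (hypothetical data).
PARENT = {
    "invoice": "billing",
    "refund": "billing",
    "billing": "finance",
    "diagnosis": "health",
}


def ancestors(concept):
    """Return the concept itself plus all of its ancestors in the toy graph."""
    seen = set()
    while concept is not None and concept not in seen:
        seen.add(concept)
        concept = PARENT.get(concept)
    return seen


@dataclass(frozen=True)
class Block:
    parents: frozenset   # parent concepts that define the semantic scope
    path: str            # where matching input is sent
    priority: int        # assumption: the higher number wins


def route(concept, blocks, default="default-model"):
    """Pick the path of the highest-priority block whose scope covers the concept."""
    lineage = ancestors(concept)
    hits = [b for b in blocks if b.parents & lineage]
    if not hits:
        return default
    return max(hits, key=lambda b: b.priority).path


BLOCKS = [
    Block(frozenset({"finance"}), "small-finetuned-model", priority=1),
    Block(frozenset({"refund"}), "refunds-api", priority=5),
    Block(frozenset({"health"}), "blocked", priority=9),
]
```

Here `route("refund", BLOCKS)` falls inside both the finance block and the narrower refund block, so the priority decides between them and the input goes to `refunds-api`. An invoice question matches only the finance block, and a concept outside every block falls through to the default path.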

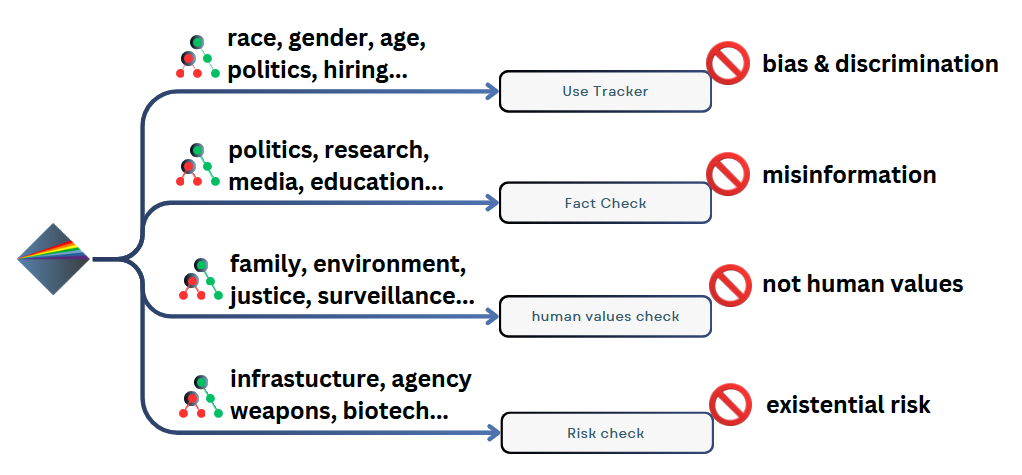

AI Safety is in Your Hands

Language Models are trained on enormous quantities of text obtained from a wide variety of sources. These sources, and consequently the models, contain biases and misinformation, and they don’t always reflect values we’d want to call our own.

The larger language models also demonstrate “emergent” properties – unexpected capabilities and behaviours they weren’t trained for. Now these models are even being used to power “agents” – applications that can act in the real world without human supervision.

We have the power, however, to make our own AI applications safe. We control the gateways that provide access to unsafe models. With langpath in our applications and our infrastructure we can recognise risky prompts and outputs and route them appropriately.

Frequently Asked Questions

Each langpath Router path choice is logged by default, and those logs can be retrieved using the Router's API. Each path can also be configured to send notifications using a webhook.

The Enterprise Conductor suite also lets you send notifications and guidance to users using a back-channel that you choose. The simplest back-channel is a chatbot plugin (widget). This plugin can be added automatically to the browser-based AI applications your team uses, or added to your own AI-focused Knowledge Base. Alternatively, you can choose Slack, Teams or Discord as a back-channel.

Yes. The langpath Conductor suite for enterprise lets you configure as many role-based paths as you need. You define and connect these roles (and the various AI providers) in your Governance Graph.

Large "frontier" models are usually the most capable, but they're also the most expensive to use. Smaller models can be just as effective for certain tasks, such as classification and RAG-based question answering. Fine-tuned smaller models can perform just as well as large models if their use is suitably constrained.

langpath lets you direct your AI work to the most cost-effective model. You can also block tasks that aren't approved and work-related, and route others to better-suited traditional or legacy applications.

Take a look at CAT3. It's a langpath-powered virtual appliance that sits between your team and the thousands of AI products they need to experiment with and use if your enterprise wants to get ahead of the pack. CAT3 intercepts, checks, blocks if necessary, and then provides guidance for any AI application used from within your Enterprise firewall or via your Zero Trust gateway. See cat3.io.

Yes. Use the langpath Router to make sure that your visitors only use your service as intended. You'll get safe-use and prompt-injection protection out-of-the-box.

Probably not. Language models and classifiers are not deterministic and they're black boxes, so you'll never be sure about their decisions. They also have to be trained on text that exactly represents the decisions that need to be made. And you'll need to retrain the model every time you make a change to your path decisions.

What's more, such models can already contribute to your langpath Router's decision process. The Conductor suite for Enterprise allows you to generate synthetic data from your knowledge graph based on your path selections. You can use that synthetic data to train a classifier or language model. Conductor then lets you plug that model into your Router instance.
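To give a feel for what synthetic training data derived from path selections might look like, here is a minimal sketch. It does not reflect how Conductor generates data: the `PATH_TERMS` vocabulary, the templates, the path names and the `synthetic_examples` function are all hypothetical.

```python
from itertools import product

# Hypothetical: terms the knowledge graph associates with each selected path.
PATH_TERMS = {
    "refunds-api": ["refund", "chargeback"],
    "small-finetuned-model": ["invoice", "payment schedule"],
}

# Hypothetical sentence templates; a real generator would be far richer.
TEMPLATES = ["How do I request a {term}?", "Tell me about my {term}."]


def synthetic_examples(path_terms, templates):
    """Yield (text, label) pairs: every template filled with every term."""
    for path, terms in path_terms.items():
        for template, term in product(templates, terms):
            yield template.format(term=term), path


examples = list(synthetic_examples(PATH_TERMS, TEMPLATES))
```

Each pair couples a generated sentence with the path it should be routed to, which is the shape of labelled data a text classifier is typically trained on.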